This is the fourth article in the Dapr series. If you missed the previous one, please check it out first.

I'll begin by correcting a minor error from my previous blog post, and after that we can get back to coding. This time, we'll use Dapr in two different microservices to see how state storage works and why it behaves the way it does.

I’m sorry

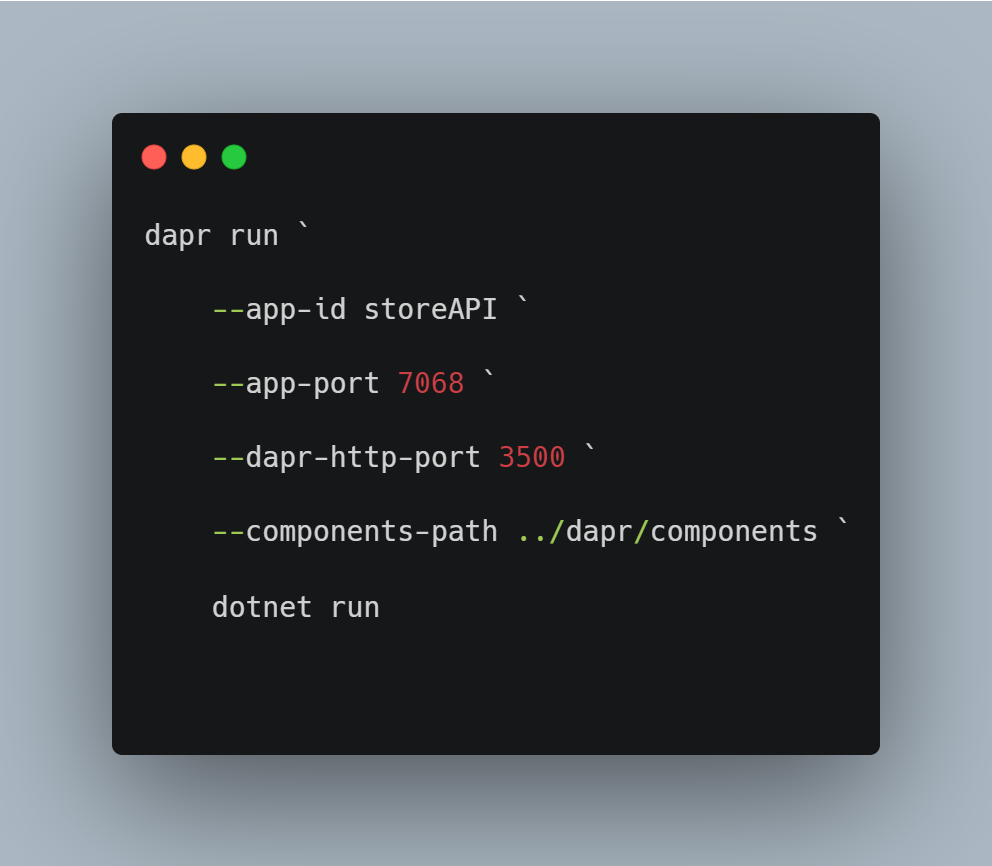

First and foremost, I apologise for a small mistake in the previous post concerning the port configuration. The --app-port value in runStoreAPI.ps1 should be taken from the project's launch configuration. In my case the value is 7068, and the PowerShell file now looks like this:

…and the launchSettings.json looks like this:

Now that the ports match, we can move on to the main topic of this blog post.
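If you want to catch this kind of mismatch before running anything, a small script can compare the two files. The sketch below is only an illustration: the launchSettings.json excerpt, the profile name StoreAPI and the exact dapr run line are hypothetical stand-ins (only the 7068 port and the --app-port flag come from this post). It reads the HTTPS port from applicationUrl and checks it against the value passed to --app-port.

```python
import json
import re
from urllib.parse import urlparse

# Hypothetical excerpt of StoreAPI's launchSettings.json: the profile name and
# layout are assumptions; only the 7068 port comes from the post.
LAUNCH_SETTINGS = """
{
  "profiles": {
    "StoreAPI": {
      "commandName": "Project",
      "applicationUrl": "https://localhost:7068"
    }
  }
}
"""

# Hypothetical line from runStoreAPI.ps1 (the components path is a placeholder).
RUN_SCRIPT = "dapr run --app-id storeAPI --app-port 7068 --dapr-http-port 3500 --components-path ../components -- dotnet run"


def https_port(launch_settings_text: str, profile: str) -> int:
    """Return the HTTPS port declared in a launchSettings.json profile."""
    settings = json.loads(launch_settings_text)
    # applicationUrl may list several URLs separated by semicolons.
    for url in settings["profiles"][profile]["applicationUrl"].split(";"):
        parsed = urlparse(url.strip())
        if parsed.scheme == "https" and parsed.port is not None:
            return parsed.port
    raise ValueError(f"no https URL with a port in profile {profile!r}")


def app_port(run_script_text: str) -> int:
    """Extract the value passed to --app-port in a dapr run command."""
    match = re.search(r"--app-port\s+(\d+)", run_script_text)
    if match is None:
        raise ValueError("--app-port not found")
    return int(match.group(1))


if __name__ == "__main__":
    expected = https_port(LAUNCH_SETTINGS, "StoreAPI")
    actual = app_port(RUN_SCRIPT)
    print("ports match" if expected == actual else f"mismatch: {expected} vs {actual}")
```

In a real project you would read both files from disk instead of using the inline strings.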

Adding the second microservice

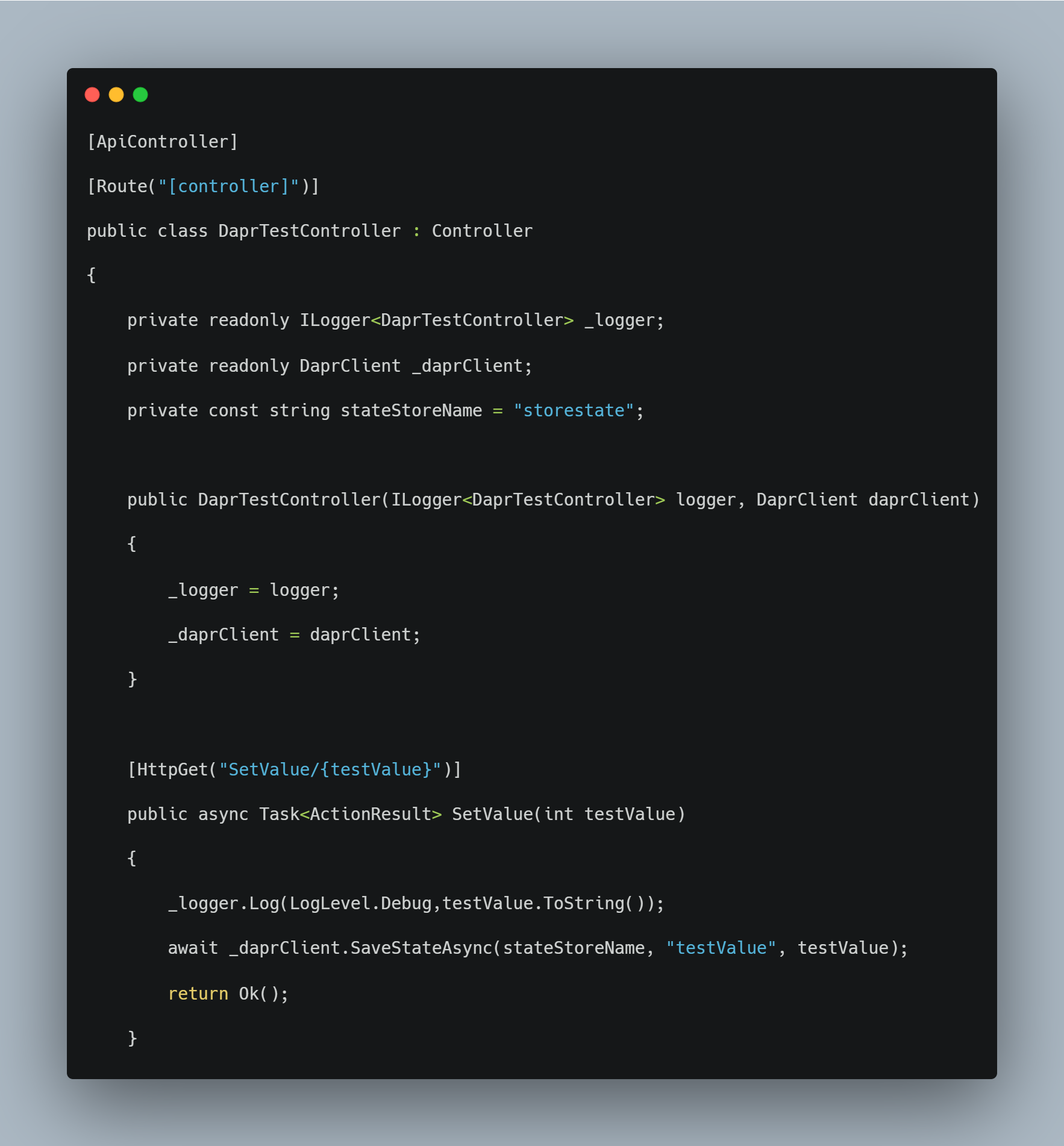

As previously stated, Dapr was designed to support microservices architectures, which typically consist of multiple services. To reflect that, we will add one more service to the solution. It is a copy of the API we already have, and the only difference is its name: OrderAPI. It contains two crucial components. The first is a controller named DaprTestController.

Its code is identical to the StoreAPI DaprTestController presented earlier. This lets us concentrate solely on Dapr mechanisms rather than on example domain logic.

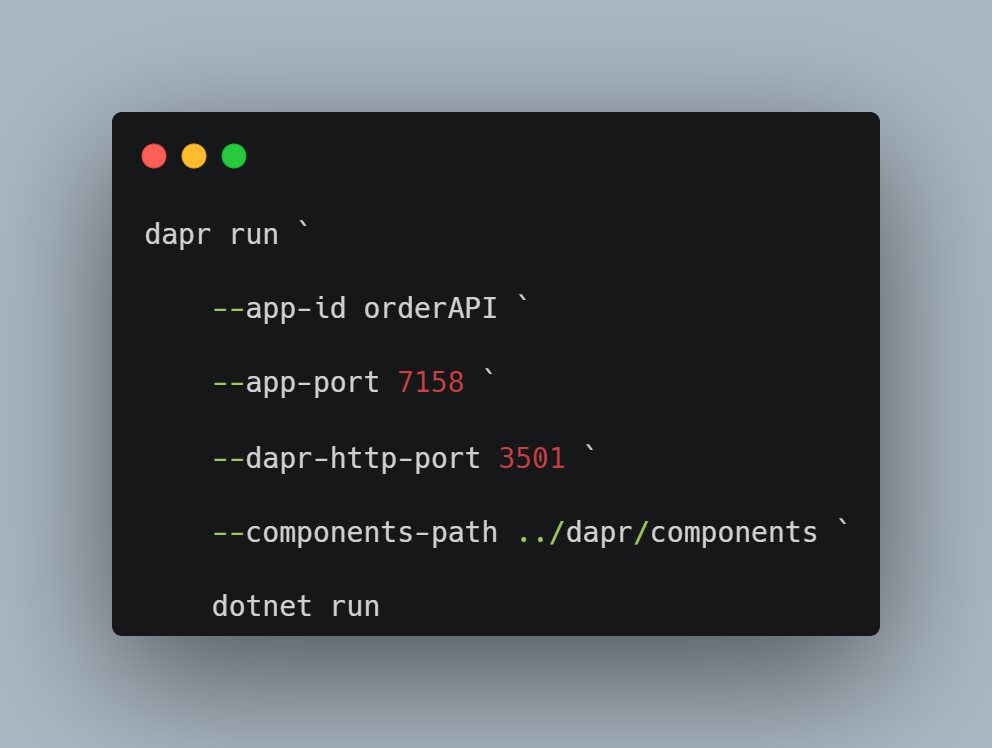

The OrderAPI project also includes a PowerShell script, runOrderAPI.ps1, which runs the project together with its Dapr sidecar.

This file looks simple, but it contains a few noteworthy elements:

- The --app-port parameter corresponds to the project's launch port (7158 for OrderAPI).
- The --dapr-http-port value must be unique for each Dapr sidecar on the machine. StoreAPI uses 3500, so OrderAPI uses 3501, which is free on my machine.
- The --components-path value deserves your attention: it points to the same component configuration as the StoreAPI run script. Yes, this means the two APIs share the same state store.
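To make these rules concrete, here is a minimal sketch that models the two run configurations and checks them. It does not call Dapr. The DaprRunConfig class and the helper functions are hypothetical, the "../components" folder and the trailing "dotnet run" command are placeholders, and the flag names are the ones used in the run scripts above. It assumes both sidecars run on the same machine, so app IDs, app ports and Dapr HTTP ports must all differ, while the components path is deliberately shared.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DaprRunConfig:
    """Hypothetical model of what one run script passes to `dapr run`."""
    app_id: str
    app_port: int
    dapr_http_port: int
    components_path: str

    def args(self) -> list:
        """Build the argument list; the app command after '--' is a placeholder."""
        return [
            "dapr", "run",
            "--app-id", self.app_id,
            "--app-port", str(self.app_port),
            "--dapr-http-port", str(self.dapr_http_port),
            "--components-path", self.components_path,
            "--", "dotnet", "run",
        ]


# Ports and app IDs from the post; the components folder is a placeholder.
STORE = DaprRunConfig("storeAPI", 7068, 3500, "../components")
ORDER = DaprRunConfig("orderAPI", 7158, 3501, "../components")


def port_conflicts(configs):
    """List values that must be unique per sidecar but are duplicated."""
    problems = []
    for field in ("app_id", "app_port", "dapr_http_port"):
        values = [getattr(c, field) for c in configs]
        if len(set(values)) != len(values):
            problems.append(f"duplicate {field}: {values}")
    return problems


def shares_state_store(configs):
    """True when every service points at the same components folder."""
    return len({c.components_path for c in configs}) == 1


if __name__ == "__main__":
    for cfg in (STORE, ORDER):
        print(" ".join(cfg.args()))
    print(port_conflicts([STORE, ORDER]), shares_state_store([STORE, ORDER]))
```

If you reuse 3500 for OrderAPI, port_conflicts reports the clash. This is the same problem the second sidecar would hit at startup.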

Running the project

Before running the projects, rebuild the solution to make sure everything compiles. Also, remember to start Docker, because the Dapr components (such as Redis) run in it.

We'll start by opening two terminal windows, one for each API project. In each terminal, go to the project directory and run that project's PowerShell script (runStoreAPI.ps1 or runOrderAPI.ps1).

Once both commands are running, we can call our API endpoints through Swagger, which is available at https://localhost:7068/swagger/index.html (StoreAPI) and https://localhost:7158/swagger/index.html (OrderAPI).

Playing around and looking under the hood

At this point, we will put our two APIs to the test. I'm not going to give you a step-by-step procedure - simply experiment with the solution to see if you can find anything interesting.

What intrigued me was that StoreAPI and OrderAPI do not share values. Hear me out: they use the same Dapr component (that is how we wrote the .ps1 run scripts), and they both use the same key, testValue. So why aren't changes made by one API reflected in the other? This is an excellent question, and the time has come to do some investigating.

When the data you get from a database looks wrong, you simply query the table to see whether the expected data is actually there. We'll apply the same strategy here. Redis is what we must query, because, as you may recall, it is the backing store of our state store component. But where is Redis? It runs in Docker, so to find it we need to open Docker Desktop and go to the Containers tab.
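The same "query the table" idea can also be scripted instead of typed into a container terminal. The following is a minimal sketch: dump_state lists every matching key and reads each one as a hash with HGETALL. It accepts any client that has redis-py style keys() and hgetall() methods. With a real server you would pass something like redis.Redis(host="localhost", port=6379, decode_responses=True), assuming the default Dapr Redis container is reachable on that port. To keep the example self-contained, it runs against a hypothetical in-memory FakeRedis. The sample keys match the ones discussed in this post. The data/version field names reflect my understanding of how Dapr's Redis state store lays out each entry, and the values are made up.

```python
import fnmatch


def dump_state(client, pattern="*"):
    """Map every key matching `pattern` to its hash fields (KEYS + HGETALL)."""
    return {key: client.hgetall(key) for key in client.keys(pattern)}


class FakeRedis:
    """Hypothetical in-memory stand-in exposing only keys() and hgetall()."""

    def __init__(self, hashes):
        self._hashes = hashes

    def keys(self, pattern="*"):
        # fnmatch approximates Redis glob patterns closely enough for this demo.
        return [k for k in self._hashes if fnmatch.fnmatchcase(k, pattern)]

    def hgetall(self, name):
        return dict(self._hashes[name])


# Keys as discussed in this post; field values are made up for the example.
SAMPLE = {
    "storeAPI||testValue": {"data": '"value set by StoreAPI"', "version": "1"},
    "orderAPI||testValue": {"data": '"value set by OrderAPI"', "version": "1"},
}

if __name__ == "__main__":
    for key, fields in dump_state(FakeRedis(SAMPLE)).items():
        print(key, fields)
```

KEYS is fine on a small development instance like this one, but it scans the whole keyspace, so on a busy Redis server an incremental SCAN (redis-py's scan_iter) is the safer choice.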

Find the dapr_redis container and open its terminal from that tab, using the button marked in the screenshot.

In this terminal, paste the command `for i in $(redis-cli KEYS '*'); do echo $i; redis-cli hgetall $i; done` and press Enter. In my case, the results look like this:

Now it's easy to see why the values aren't shared across the APIs: the answer lies in the keys themselves. The keys are "orderAPI||testValue" and "storeAPI||testValue". When working with state storage, Dapr prevents values from different applications (microservices) from getting mixed up by prefixing each key with the application's app ID. The output also shows the key-value pair created in the second Dapr blog post, along with each entry's current value and its version.
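The naming scheme visible in the Redis output can be captured in a couple of lines. This is a minimal model of the default `<app-id>||<key>` format seen above, not a call into Dapr. state_key and split_state_key are hypothetical helper names. The sketch shows that two apps saving the same key end up with two distinct Redis entries.

```python
def state_key(app_id: str, key: str) -> str:
    """Build the Redis key in the '<app-id>||<key>' format seen in the output."""
    return f"{app_id}||{key}"


def split_state_key(redis_key: str) -> tuple:
    """Inverse of state_key: recover (app_id, key) from a stored Redis key."""
    app_id, sep, key = redis_key.partition("||")
    if not sep:
        raise ValueError(f"not an app-id-prefixed key: {redis_key!r}")
    return app_id, key


if __name__ == "__main__":
    # Both services save the same logical key...
    keys = {state_key(app, "testValue") for app in ("storeAPI", "orderAPI")}
    # ...but end up with two separate Redis entries.
    print(sorted(keys))
```

Because the prefix comes from the app ID rather than from the component, sharing one state store component does not, by default, mean sharing values.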

Summary

We got a lot done today, and you did well if you kept up. We configured and ran Dapr for two microservices that share the same state store component. We also looked under the hood with the Redis CLI to see why the data behaves the way it does.

The next post will discuss data serialisation and deserialisation with Dapr, as well as moving Dapr logic out of the controller level.

Till next time. Keep coding.